Key points:

- AI is difficult to understand, and its future is even harder to predict

With the swift arrival of ChatGPT and other sophisticated artificial intelligence chatbots, AI is poised to transform higher education, for better or worse. Some professors and students fear that it will ruin valuable educational experiences, such as the study of challenging texts, or even replace human beings in a range of careers. Others think that AI has great potential in education. Ethical and practical issues are being hotly debated on campuses.

AI is difficult to understand, and its future is even harder to predict. Whenever we face complex and uncertain change, we need mental models to make preliminary sense of what is happening.

So far, many of the models that people are using for AI are metaphors, referring to things that we understand better, such as talking birds, the printing press, a monster, conventional corporations, or the Industrial Revolution. Such metaphors are really shorthand for elaborate models that incorporate factual assumptions, predictions, and value judgments. No one can be sure which model is wisest, but we should be forming explicit models so that we can share them with other people, test them against new information, and revise them accordingly.

“Forming models” may not be exactly how a group of Tufts undergraduates understood their task when they chose to hold discussions of AI in education, but they certainly believed that they should form and exchange ideas about this topic. For an hour, these students considered the implications of using AI as a research and educational tool, academic dishonesty, big tech companies, attempts to regulate AI, and related issues. They allowed us to observe and record their discussion, and we derived a visual model from what they said.

We present this model as a starting point for anyone else’s reflections on AI in education. The Tufts students are not necessarily representative of college students in general, nor are they exceptionally expert on AI. But they are thoughtful people active in higher education who can help others to enter a critical conversation.

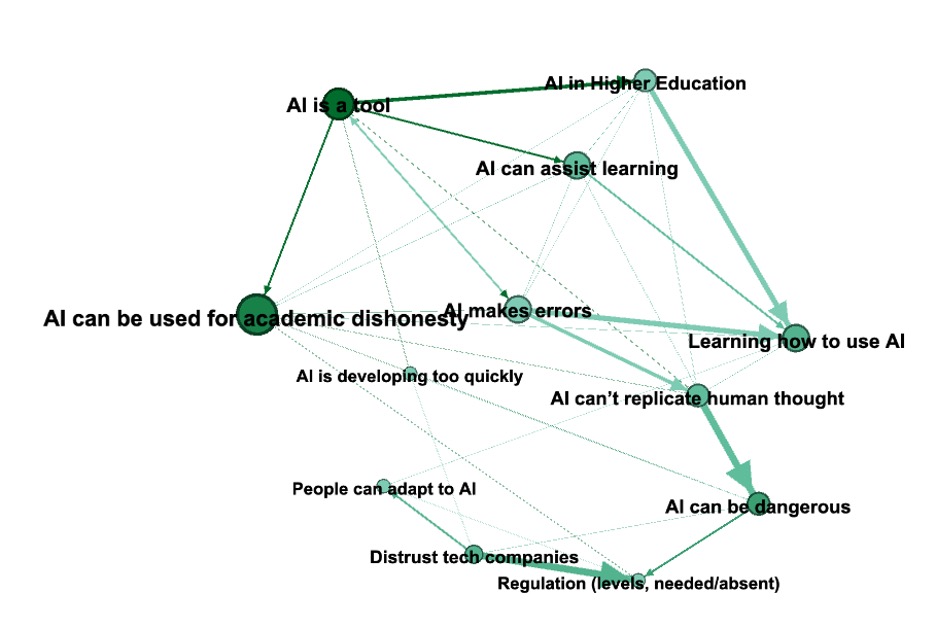

Our method for deriving a diagram from their discussion is unusual and requires an explanation. In almost every comment that a student made, at least two ideas were linked together. For instance, one student said: “If not regulated correctly, AI tools might lead students to abuse the technology in dishonest ways.” We interpret that comment as a link between two ideas: lack of regulation and academic dishonesty. When the three of us analyzed their whole conversation, we found 32 such ideas and 175 connections among them.

The graphic shows the 12 ideas that were most commonly mentioned and linked to others. The size of each dot reflects the number of times that idea was linked to another. The direction of each arrow indicates which factor caused or explained another.
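For readers who want to try this kind of coding on their own discussions, the counting step can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure or tooling we used: the function names are hypothetical, and apart from the first pair (taken from the student comment quoted above) the example links are invented for demonstration.

```python
from collections import Counter

# Each coded comment becomes a (cause_or_explanation, effect) pair.
# Only the first pair comes from the quoted comment; the rest are
# made-up placeholders so the example has something to count.
coded_links = [
    ("lack of regulation", "academic dishonesty"),
    ("AI makes errors", "AI as a learning tool"),
    ("tech company motives", "lack of regulation"),
    ("AI as a learning tool", "academic dishonesty"),
    ("lack of regulation", "academic dishonesty"),
]


def link_counts(links):
    """Count how many times each idea appears in a link, in either role.

    This count is what would drive the size of each dot in the diagram.
    """
    counts = Counter()
    for source, target in links:
        counts[source] += 1
        counts[target] += 1
    return counts


def top_ideas(links, n):
    """Return the n most frequently linked ideas."""
    return [idea for idea, _ in link_counts(links).most_common(n)]


def edges_among(links, ideas):
    """Count the directed links whose two ends are both in `ideas`.

    Each key is (source, target), matching the direction of an arrow.
    """
    keep = set(ideas)
    return Counter((s, t) for s, t in links if s in keep and t in keep)
```

Calling `top_ideas(coded_links, 12)` on a full set of coded links would give the ideas to draw, and `edges_among` would give the arrows between them. The judgment about which two ideas a comment connects, and in which direction, still has to be made by human readers.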

Coding their discussion in this way, we uncovered the student participants' areas of interest and, at times, concern. Participants were keen to discuss how AI can be used as a tool, noting that it can both assist learning and help students violate academic integrity. Students were careful to stipulate how imperfect AI is in its current state, frequently connecting other thoughts to the fact that AI makes errors. For example, one student noted that AIs are sometimes “just regurgitating knowledge that they might not even have,” resulting in incorrect statements. This concern mirrors faculty concerns that others have highlighted, such as the results of a recent Educause survey, which point to worries about academic integrity as well as a general lack of knowledge about AI use. However, the students also stated that AI could be useful if used properly, such as when they were “really stuck on a couple of sentences…bouncing some ideas off ChatGPT…was really useful for me.”

On the cynical side of the conversation, students were wary of tech companies and tended to point to increased regulation of those companies as a remedy for corporate control of AI. For example, one student pointed out, “they’re [tech companies] trying to be…innovative, and being seen as the next thing, or making money, or whatever other motivations they might have, so I won’t necessarily trust them.” The view that these companies are mostly interested in profit, regardless of the potential consequences of AI’s development, recurred throughout the students’ discussion. Students also voiced the need for “top down regulation,” with one stating that this would need to come from the government or an international organization, as they did not “trust the people running tech companies to regulate things properly on their own end.”

These students also viewed AI as particularly dangerous because it cannot replicate human behavior and emotions. Part of this concern was for the jobs that AI could replace. As a recent report from the IMF discussed, up to 60 percent of jobs could be affected by AI in one way or another. Likewise, recent discussions of the environmental impact of AI show that the potential effects of its use are more widespread than generally considered.

One line of conversation looked to the “it” of AI itself: although the technology seems to be developing too quickly, people can adapt to it, and therefore there is value in learning how to use it. Students noted that being given the opportunity to learn how to use AI ethically in the classroom was particularly helpful; one stated that “there were like a number of professors in my classes that were taking the time to kind of like explore [ChatGPT], pointing out…I don’t want you like writing your papers with this, and here’s why…But let’s think about what this can actually be used for…And so, having those conversations were useful over time.” By taking time to discuss potential uses for AI in their work, some professors have helped students learn the ethical uses of the technology.

Undergraduate students are experiencing the early stages of sophisticated AI becoming integrated into higher education, used by administrators, educators, and students. As the students who participated in the discussion pointed out, and as the model above shows, while AI in higher ed has its flaws, it must be understood better so that those using it can do so ethically. These students provided some idea of how undergraduates have begun to use and view AI. Therefore, they offer a personal and important perspective that those inside and outside of higher ed can learn from.
